As a follow-up to this post, we would like to publish an article with an updated version of the scraper. A couple of comments expressed interest in this topic, so we decided to:

- create a completely new scraper, based on symfony/console

- write better code (it’s 2020 now, so we should use a PSR-11 container to manage dependencies, symfony/process to launch processes, composer commands to install the project, and Docker with docker-compose to run it)

- replace the old code, which doesn’t work anymore: Walmart changed its layout and now renders its pages with React, so we need a headless browser. We will use PhantomJS for that.

Let’s start. As mentioned above, we will use symfony/console (one of the most popular Composer packages, with more than 200M downloads according to Packagist!). We will probably also need symfony/dom-crawler and symfony/css-selector to work with the DOM tree of the site, as well as doctrine/dbal to save the scraped data into a MySQL table (for simplicity we will use plain DBAL here, without the Doctrine ORM).

Let’s create our first composer.json version:

```json
{
    "autoload": {
        "psr-4": {
            "App\\": "src/"
        }
    },
    "autoload-dev": {
        "psr-4": {
            "App\\Tests\\": "tests/"
        }
    },
    "require": {
        "guzzlehttp/guzzle": "^6.5",
        "symfony/dom-crawler": "^5.0",
        "symfony/css-selector": "^5.0",
        "doctrine/dbal": "^2.10",
        "symfony/console": "^5.0"
    }
}
```

Let’s run `composer install` and create a basic command, the only one in our project, which will launch the scraper:

```php
<?php

/**
 * Main command
 */

namespace App\Command;

use Symfony\Component\Console\Command\Command;
use Symfony\Component\Console\Input\InputInterface;
use Symfony\Component\Console\Output\OutputInterface;

class ScraperCommand extends Command
{
    // the name of the command (the part after "bin/application")
    protected static $defaultName = 'app:run-scraper';

    protected function configure()
    {
        $this
            ->setDescription('Starts parsing')
            ->setHelp('This command launches the parser');
    }

    protected function execute(InputInterface $input, OutputInterface $output)
    {
        $output->write('Hello');

        return 0;
    }
}
```

Then let’s add the entrypoint script bin/application (without a .php extension) to our project with the following contents:

```php
<?php

/**
 * Application entrypoint script
 */

require __DIR__ . '/../vendor/autoload.php';

use App\Command\ScraperCommand;
use Symfony\Component\Console\Application;

$application = new Application();
$application->add(new ScraperCommand());
$application->run();
```

That’s it: if we now run `php bin/application app:run-scraper`, we should see ‘Hello’ in the output. We have created a skeleton for our future console command, but it doesn’t do anything yet.

To make launching the app more convenient, and to get the usual advantages of Docker (isolation, the same environment everywhere, etc.), let’s create a docker-compose.yaml file:

```yaml
version: '3.4'

services:
  scraper:
    image: bytes85/php7.4-cli
    volumes:
      - ./:/scraper
    entrypoint: ["php", "-d", "memory_limit=-1", "/scraper/bin/application", "app:run-scraper"]
```

It’s just one service that mounts our source code into the container as /scraper and runs the command.

Now it’s time to think about the dependencies of our application (the console command) and create the Symfony DI container configuration:

```yaml
# config/services.yaml

# Some retrievable parameters
parameters:
    base_url: 'https://www.walmart.com'
    all_departments_url: '/all-departments'

services:
    # default configuration for services in *this* file
    _defaults:
        autowire: true      # Automatically injects dependencies in your services.
        autoconfigure: true # Automatically registers your services as commands, event subscribers, etc.

    Symfony\Component\DependencyInjection\ParameterBag\ParameterBagInterface:
        class: 'Symfony\Component\DependencyInjection\ParameterBag\ParameterBagInterface'
        factory: ['@service_container', 'getParameterBag']

    App\Command\ScraperCommand:
        class: 'App\Command\ScraperCommand'
        arguments:
            $parameters: '@Symfony\Component\DependencyInjection\ParameterBag\ParameterBagInterface'
            $name: 'app:run-scraper'

    Monolog\Handler\StreamHandler:
        arguments:
            $stream: 'php://stderr'

    Monolog\Logger:
        arguments:
            $name: 'app'
        calls:
            # reference the handler service defined above by its ID
            - [pushHandler, ['@Monolog\Handler\StreamHandler']]
```

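and update bin/application so that it builds the container from this configuration and loads our command from it through a ContainerCommandLoader, instead of instantiating ScraperCommand directly: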

```php
<?php

/**
 * Application entrypoint script
 */

require __DIR__ . '/../vendor/autoload.php';

use App\Command\ScraperCommand;
use Symfony\Component\Config\FileLocator;
use Symfony\Component\Console\Application;
use Symfony\Component\Console\CommandLoader\ContainerCommandLoader;
use Symfony\Component\DependencyInjection\ContainerBuilder;
use Symfony\Component\DependencyInjection\Loader\YamlFileLoader;

$application = new Application();

// build the DI container from the YAML configuration
$containerBuilder = new ContainerBuilder();
$loader = new YamlFileLoader($containerBuilder, new FileLocator(__DIR__));
$loader->load(__DIR__ . '/../config/services.yaml');

// commands are fetched from the container only when they are needed
$commandLoader = new ContainerCommandLoader(
    $containerBuilder,
    [
        'app:run-scraper' => ScraperCommand::class,
    ]
);

$application->setCommandLoader($commandLoader);
$application->run();
```
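The `autowire: true` option is what lets the container work out most constructor arguments on its own. The sketch below shows the underlying idea with plain PHP reflection: class-typed parameters are resolved recursively, scalar ones come from explicit configuration. It is a deliberately tiny, hypothetical container (`MiniContainer`, `FakeCrawler` and `FakePhantomJS` are made-up names), not how Symfony's ContainerBuilder is actually implemented, and it assumes PHP 7.4+ for typed properties.

```php
<?php

// Hypothetical, minimal illustration of constructor autowiring via reflection.
// This is NOT how Symfony's ContainerBuilder works internally, just the idea.
final class MiniContainer
{
    /** @var array<string, mixed> explicit arguments keyed by "Class::$param" */
    private array $arguments;

    /** @var array<string, object> shared service instances, keyed by class name */
    private array $instances = [];

    public function __construct(array $arguments = [])
    {
        $this->arguments = $arguments;
    }

    public function get(string $id): object
    {
        // services are shared: each class is built only once
        if (!isset($this->instances[$id])) {
            $this->instances[$id] = $this->build($id);
        }

        return $this->instances[$id];
    }

    private function build(string $class): object
    {
        $reflection = new ReflectionClass($class);
        $constructor = $reflection->getConstructor();
        if ($constructor === null) {
            return $reflection->newInstance();
        }

        $args = [];
        foreach ($constructor->getParameters() as $param) {
            $key = $class . '::$' . $param->getName();
            $type = $param->getType();

            if (array_key_exists($key, $this->arguments)) {
                // explicitly configured value, like the "$baseUrl:" entry in services.yaml
                $args[] = $this->arguments[$key];
            } elseif ($type instanceof ReflectionNamedType && !$type->isBuiltin()) {
                // class-typed parameter: resolve it recursively (autowiring)
                $args[] = $this->get($type->getName());
            } elseif ($param->isDefaultValueAvailable()) {
                $args[] = $param->getDefaultValue();
            } else {
                throw new RuntimeException("Cannot resolve argument {$key}");
            }
        }

        return $reflection->newInstanceArgs($args);
    }
}

// Hypothetical stand-ins for the real Crawler and PhantomJS services
class FakeCrawler
{
}

class FakePhantomJS
{
    public FakeCrawler $crawler;
    public string $baseUrl;

    public function __construct(FakeCrawler $crawler, string $baseUrl)
    {
        $this->crawler = $crawler;
        $this->baseUrl = $baseUrl;
    }
}

$container = new MiniContainer(['FakePhantomJS::$baseUrl' => 'https://www.walmart.com']);
$service = $container->get(FakePhantomJS::class);
echo get_class($service->crawler), ' ', $service->baseUrl, PHP_EOL; // FakeCrawler https://www.walmart.com
```

As with Symfony's default, services are shared here: asking the container for `FakeCrawler` again returns the very instance that was injected into `FakePhantomJS`.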

Now that the Walmart website is powered by React, we can only scrape it with a headless browser such as PhantomJS. There is a convenient Composer package for PHP, jakoch/phantomjs-installer, which downloads the PhantomJS binary to your vendor bin folder and generates a class with the path to it. Let’s add this dependency and register it in services.yaml, together with the PhantomJS service we are about to write:

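```yaml
# under services:
PhantomInstaller\PhantomBinary:
    class: 'PhantomInstaller\PhantomBinary'

# referenced below as $crawler, so it must be defined as a service too
Symfony\Component\DomCrawler\Crawler:
    class: 'Symfony\Component\DomCrawler\Crawler'

App\Service\PhantomJS:
    class: 'App\Service\PhantomJS'
    arguments:
        $binary: '@PhantomInstaller\PhantomBinary'
        $crawler: '@Symfony\Component\DomCrawler\Crawler'
        $baseUrl: '%base_url%'
```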

and inject it into the class constructor of our command:

```yaml
App\Command\ScraperCommand:
    class: 'App\Command\ScraperCommand'
    arguments:
        $parameters: '@Symfony\Component\DependencyInjection\ParameterBag\ParameterBagInterface'
        $logger: '@Monolog\Logger'
        $phantomJS: '@App\Service\PhantomJS'
        $name: 'app:run-scraper'
```

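```php
use App\Service\PhantomJS;
use Monolog\Logger;
use Symfony\Component\DependencyInjection\ParameterBag\ParameterBagInterface;

// ...

private ParameterBagInterface $parameters;
private Logger $logger;
private PhantomJS $phantomJS;

public function __construct(
    ParameterBagInterface $parameters,
    Logger $logger,
    PhantomJS $phantomJS,
    ?string $name = null
) {
    parent::__construct($name);
    $this->parameters = $parameters;
    $this->logger = $logger;
    $this->phantomJS = $phantomJS;
}
```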

Let’s also create a service called PhantomJS that will contain all the crawling logic:

```php
<?php

namespace App\Service;

use PhantomInstaller\PhantomBinary;
use Symfony\Component\DomCrawler\Crawler;
use Symfony\Component\Process\Process;

class PhantomJS
{
    /**
     * PhantomJS script (relative to the project root) that prints the rendered page source
     */
    public const PHANTOMJS_SOURCE_SCRIPT = 'phantomjs/getsource.js';

    private PhantomBinary $binary;
    private string $url;
    private string $baseUrl;
    private Crawler $crawler;

    public function __construct(PhantomBinary $binary, Crawler $crawler, string $baseUrl)
    {
        $this->binary = $binary;
        $this->crawler = $crawler;
        $this->baseUrl = $baseUrl;
    }

    public function getUrl(): string
    {
        return $this->url;
    }

    public function setUrl(string $url): void
    {
        $this->url = $url;
    }

    /**
     * Returns [href, text] pairs for all links on the page matching the given CSS selector
     */
    public function getLinks(string $selector): array
    {
        // reset the crawler so nodes of a previously loaded page are not mixed in
        $this->crawler->clear();
        $this->crawler->add($this->getPageSource());

        return $this->crawler->filter($selector)->extract(['href', '_text']);
    }

    /**
     * Renders the current URL with PhantomJS and returns the resulting HTML
     */
    private function getPageSource(): string
    {
        $process = new Process([
            $this->binary::getBin(),
            realpath(__DIR__ . '/../../') . DIRECTORY_SEPARATOR . self::PHANTOMJS_SOURCE_SCRIPT,
            $this->baseUrl . $this->url,
        ]);
        // throws a ProcessFailedException if PhantomJS exits with an error
        $process->mustRun();

        return $process->getOutput();
    }
}
```
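The service relies on symfony/process (`mustRun()` and `getOutput()`) to run the PhantomJS binary and to fail if it exits with an error. For readers curious what that boils down to, here is a rough plain-PHP sketch with `proc_open()`. `runOrFail()` is a hypothetical helper, and the demo runs the PHP binary itself instead of PhantomJS so it works without extra tools. It assumes PHP 7.4+, where `proc_open()` accepts the command as an array and bypasses the shell.

```php
<?php

/**
 * Hypothetical helper: runs a command without a shell, returns its stdout and
 * throws on a non-zero exit code, roughly what Process::mustRun() plus
 * getOutput() provide. Unlike symfony/process, there is no timeout handling.
 */
function runOrFail(array $command): string
{
    // only stdout is piped; stdin and stderr are inherited from this process,
    // so we never block on a second, unread pipe
    $descriptors = [1 => ['pipe', 'w']];

    // PHP >= 7.4: an array command is executed directly, without /bin/sh,
    // so arguments such as URLs need no shell escaping
    $process = proc_open($command, $descriptors, $pipes);
    if (!is_resource($process)) {
        throw new RuntimeException('Could not start ' . $command[0]);
    }

    $stdout = stream_get_contents($pipes[1]);
    fclose($pipes[1]);

    $exitCode = proc_close($process);
    if ($exitCode !== 0) {
        throw new RuntimeException("Command failed with exit code {$exitCode}");
    }

    return $stdout;
}

// Demo: the PHP binary stands in for PhantomJS and "renders" a tiny page
$html = runOrFail([PHP_BINARY, '-r', 'echo "<html><body>rendered</body></html>";']);
echo $html, PHP_EOL;
```

Symfony's Process additionally applies a default timeout and can stream or capture stderr, which is a good reason to keep using it in the real scraper.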

The PhantomJS service provides the method to get all the categories’ links, so the execute() method of our console command would look like this:

```php
protected function execute(InputInterface $input, OutputInterface $output)
{
    // parameter name as defined in services.yaml
    $this->phantomJS->setUrl($this->parameters->get('all_departments_url'));
    $departments = $this->phantomJS->getLinks(
        'div.alldeps-DepartmentLinks-columnList a.alldeps-DepartmentLinks-categoryList-categoryLink'
    );

    return 0;
}
```
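Under the hood, `Crawler::filter()` converts the CSS selector to XPath (via symfony/css-selector), and `extract(['href', '_text'])` collects one `[href, text]` pair per match. A dependency-free approximation with PHP's DOM extension is sketched below. The `extractLinks()` and `classXPath()` helpers and the sample markup are hypothetical, and the class-matching XPath is written by hand rather than generated.

```php
<?php

// XPath predicate matching one CSS class, similar in spirit to what symfony/css-selector generates
function classXPath(string $class): string
{
    return "contains(concat(' ', normalize-space(@class), ' '), ' {$class} ')";
}

// Hypothetical helper: returns [href, text] pairs, similar to Crawler::extract(['href', '_text'])
function extractLinks(string $html, string $xpathQuery): array
{
    $document = new DOMDocument();
    $previous = libxml_use_internal_errors(true); // tolerate sloppy real-world HTML
    $document->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $links = [];
    foreach ((new DOMXPath($document))->query($xpathQuery) as $node) {
        $links[] = [$node->getAttribute('href'), trim($node->textContent)];
    }

    return $links;
}

// Hypothetical markup shaped like the selector used in execute()
$html = <<<'HTML'
<div class="alldeps-DepartmentLinks-columnList">
  <a class="alldeps-DepartmentLinks-categoryList-categoryLink" href="/cp/toys">Toys</a>
  <a class="alldeps-DepartmentLinks-categoryList-categoryLink" href="/cp/books">Books</a>
  <a class="other-link" href="/help">Help</a>
</div>
HTML;

$query = '//div[' . classXPath('alldeps-DepartmentLinks-columnList') . ']'
    . '//a[' . classXPath('alldeps-DepartmentLinks-categoryList-categoryLink') . ']';

foreach (extractLinks($html, $query) as [$href, $text]) {
    echo $text, ' => ', $href, PHP_EOL;
}
```

Only the two category links are returned; the third link is skipped because its class does not match.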

If we run the command now, the $departments variable will contain all category links together with their names. Next, we need to loop through the categories and collect their items, page by page:

```php
protected function execute(InputInterface $input, OutputInterface $output)
{
    // 'allCategories' and 'items' hold the URL and the CSS selectors used below;
    // they have to be defined under "parameters:" in services.yaml
    $this->phantomJS->setUrl($this->parameters->get('allCategories')['url']);
    $categories = $this->phantomJS->getLinks($this->parameters->get('allCategories')['linksSelector']);

    foreach ($categories as $category) {
        [$categoryUrl, $categoryName] = $category;
        $page = 1;
        $allItems = $uniqueness = [];

        do {
            $output->writeln('Category name: ' . $categoryName . '; Category URL: ' . $categoryUrl . '; page: ' . $page);
            $this->phantomJS->setUrl($categoryUrl . '?page=' . $page);
            $items = $this->phantomJS->getItems(
                $this->parameters->get('items')['linksSelector'],
                $this->parameters->get('items')['card']
            );

            // stop as soon as a page repeats one we have already seen
            $key = md5(json_encode($items));
            if (in_array($key, $uniqueness, true)) {
                break;
            }
            $uniqueness[] = $key;

            $allItems = array_merge($allItems, $items);
            $output->writeln('Found ' . count($items) . ' products');
            $page++;
        } while (count($items));

        // debugging: dump the items of the first category and stop
        var_dump($allItems);
        die();
    }

    return 0;
}
```
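The loop stops either on an empty page or when a page repeats one it has already seen, which is what the md5 fingerprint of the JSON-encoded items detects (presumably the site keeps serving the last page for page numbers beyond the end). The sketch below isolates that logic so it can be tried without a browser; `collectAllPages()`, the fake `$fetch` callback and the `$maxPages` safety cap are assumptions added for illustration.

```php
<?php

/**
 * Hypothetical helper: collects items page by page until a page is empty or
 * repeats an earlier one, the same stop conditions as the loop in execute().
 *
 * @param callable $fetchPage function (int $page): array, returns the items of a page
 * @param int      $maxPages  safety cap against endless pagination (an added assumption)
 */
function collectAllPages(callable $fetchPage, int $maxPages = 1000): array
{
    $allItems = [];
    $seen = [];

    for ($page = 1; $page <= $maxPages; $page++) {
        $items = $fetchPage($page);
        if ($items === []) {
            break; // nothing on this page: we are past the end
        }

        // fingerprint of the page content; a repeat means an earlier page is served again
        $key = md5(json_encode($items));
        if (isset($seen[$key])) {
            break;
        }
        $seen[$key] = true;

        $allItems = array_merge($allItems, $items);
    }

    return $allItems;
}

// Fake site with 3 pages that keeps returning page 3 for any larger page number
$pages = [1 => ['a', 'b'], 2 => ['c', 'd'], 3 => ['e']];
$fetch = function (int $page) use ($pages): array {
    return $pages[min($page, 3)];
};

echo implode(', ', collectAllPages($fetch)), PHP_EOL; // a, b, c, d, e
```

Storing the fingerprints as array keys turns each duplicate check into a constant-time `isset()` instead of a linear `in_array()` scan, although for a few dozen pages either approach is fine.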

Because of the var_dump()/die() at the end of the first pass, we expect to see $allItems with all the items of a single category. We also need to add the missing methods to the PhantomJS service:

```php
/**
 * Returns an array of items for the current page
 *
 * @param string $selector      CSS selector of a single item card
 * @param array  $cardSelectors CSS selectors of the item properties (title, price, ...)
 *
 * @return array
 */
public function getItems(string $selector, array $cardSelectors): array
{
    $this->crawler->clear();
    $this->crawler->add($this->getPageSource());

    return $this->crawler->filter($selector)->each(
        function (Crawler $node) use ($cardSelectors) {
            return $this->getItemDetails($node, $cardSelectors);
        }
    );
}

/**
 * Returns an array with all required properties of an item (title, price, ...)
 *
 * @param Crawler $node
 * @param array   $cardSelectors
 *
 * @return array
 */
private function getItemDetails(Crawler $node, array $cardSelectors): array
{
    return [
        'img' => $node->filter('img')->extract(['src']),
        'title' => $node->filter($cardSelectors['title'])->extract(['_text']),
        'price' => $node->filter($cardSelectors['price'])->extract(['_text']),
        'soldBy' => $node->filter($cardSelectors['soldBy'])->extract(['_text']),
        'rating' => $node->filter($cardSelectors['rating'])->extract(['_text']),
    ];
}
```
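The key detail in `getItemDetails()` is that each `$node->filter()` call searches only inside the current card, so a title is never picked up from a neighbouring product. With the plain DOM extension, the same scoping comes from passing the card as the context node to `DOMXPath::query()` and starting each query with `.//`. A minimal sketch follows; `extractCards()`, the markup and the queries are hypothetical, not Walmart's real selectors.

```php
<?php

/**
 * Hypothetical helper: for every card matched by $cardQuery, runs each property
 * query relative to that card (note the leading "." in the queries).
 */
function extractCards(string $html, string $cardQuery, array $propertyQueries): array
{
    $document = new DOMDocument();
    $previous = libxml_use_internal_errors(true); // tolerate sloppy real-world HTML
    $document->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($document);
    $items = [];
    foreach ($xpath->query($cardQuery) as $card) {
        $item = [];
        foreach ($propertyQueries as $name => $query) {
            $values = [];
            // the second argument makes $card the context node of the query
            foreach ($xpath->query($query, $card) as $node) {
                $values[] = trim($node->textContent);
            }
            $item[$name] = $values; // a list per property, like Crawler::extract()
        }
        $items[] = $item;
    }

    return $items;
}

// Hypothetical markup and queries
$html = <<<'HTML'
<ul>
  <li class="card"><span class="title">Kettle</span><span class="price">$19.99</span></li>
  <li class="card"><span class="title">Toaster</span></li>
</ul>
HTML;

$cardQuery = "//li[contains(concat(' ', normalize-space(@class), ' '), ' card ')]";
$properties = [
    'title' => ".//span[@class='title']",
    'price' => ".//span[@class='price']",
];

echo json_encode(extractCards($html, $cardQuery, $properties)), PHP_EOL;
// [{"title":["Kettle"],"price":["$19.99"]},{"title":["Toaster"],"price":[]}]
```

The second card yields an empty list for `price`: exactly the case where reading element `[0]` of an extracted value needs a fallback when the item is saved.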

Now we’re ready to launch the scraper, but we also need a data storage for the items. For simplicity we will use a MySQL database with a single table called “products” with the columns “title”, “price”, “soldBy” and “rating”. We’re not going to build a proper data structure here, nor perform any data validation or filtering, nor use migrations. All of this is possible, but out of scope for this article.

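Let’s add the Doctrine DBAL connection to the DI container. Note that `host: db` expects a MySQL server reachable under the hostname db from the scraper container, for example a service named db in docker-compose.yaml: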

```yaml
App\Command\ScraperCommand:
    class: 'App\Command\ScraperCommand'
    arguments:
        $parameters: '@Symfony\Component\DependencyInjection\ParameterBag\ParameterBagInterface'
        $logger: '@Monolog\Logger'
        $phantomJS: '@App\Service\PhantomJS'
        $connection: '@Doctrine\DBAL\Connection'
        $name: 'app:run-scraper'

# ...

Doctrine\DBAL\Connection:
    factory: ['Doctrine\DBAL\DriverManager', 'getConnection']
    arguments:
        - dbname: walmart
          user: root
          password: password
          host: db
          driver: pdo_mysql
```

and add it to the command constructor:

```php
use Doctrine\DBAL\Connection;

// ...

private Connection $connection;

public function __construct(
    ParameterBagInterface $parameters,
    Logger $logger,
    PhantomJS $phantomJS,
    Connection $connection,
    ?string $name = null
) {
    parent::__construct($name);
    $this->parameters = $parameters;
    $this->logger = $logger;
    $this->phantomJS = $phantomJS;
    $this->connection = $connection;
}
```

Finally, once $allItems has been collected for a category, let’s loop through it (in place of the var_dump()/die() debugging lines) and insert every item into the products table:

```php
foreach ($allItems as $item) {
    $queryBuilder = $this->connection->createQueryBuilder();
    $queryBuilder
        ->insert('products')
        ->values(
            [
                'title' => ':title',
                'price' => ':price',
                'soldBy' => ':soldBy',
                'rating' => ':rating',
            ]
        )
        // extract() returns a list: take the first match, or null if the selector found nothing
        ->setParameter(':title', $item['title'][0] ?? null)
        ->setParameter(':price', $item['price'][0] ?? null)
        ->setParameter(':soldBy', $item['soldBy'][0] ?? null)
        ->setParameter(':rating', $item['rating'][0] ?? null);

    $queryBuilder->execute();
}
```
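Because `extract()` always returns a list, an item whose selector matched nothing has an empty array for that field. Below is a sketch of the flatten-and-insert step with plain PDO: one prepared statement, and all rows of a category inside a single transaction. It runs against an in-memory SQLite database so no MySQL server is needed (the `pdo_sqlite` extension is assumed, and the TEXT column types are a guess at the schema); `toRow()`, `saveItems()` and the sample rows are hypothetical. With DBAL itself, `Connection::insert('products', $row)` wrapped in `beginTransaction()`/`commit()` should achieve the same.

```php
<?php

/**
 * Hypothetical helper: turns the lists produced by extract() into one value per
 * column, using null when the selector matched nothing.
 */
function toRow(array $item): array
{
    $row = [];
    foreach (['title', 'price', 'soldBy', 'rating'] as $column) {
        $row[$column] = $item[$column][0] ?? null;
    }

    return $row;
}

/**
 * Hypothetical helper: inserts all items with one prepared statement inside a
 * single transaction, so a failure leaves no half-saved category behind.
 */
function saveItems(PDO $pdo, array $items): void
{
    $statement = $pdo->prepare('INSERT INTO products (title, price, soldBy, rating) VALUES (?, ?, ?, ?)');

    $pdo->beginTransaction();
    try {
        foreach ($items as $item) {
            // positional placeholders: values follow the column order used in toRow()
            $statement->execute(array_values(toRow($item)));
        }
        $pdo->commit();
    } catch (Throwable $e) {
        $pdo->rollBack();
        throw $e;
    }
}

// Demo on in-memory SQLite; the TEXT column types are an assumption
$pdo = new PDO('sqlite::memory:', null, null, [PDO::ATTR_ERRMODE => PDO::ERRMODE_EXCEPTION]);
$pdo->exec('CREATE TABLE products (title TEXT, price TEXT, soldBy TEXT, rating TEXT)');

saveItems($pdo, [
    ['title' => ['Kettle'], 'price' => ['$19.99'], 'soldBy' => ['Example Seller'], 'rating' => ['4.5']],
    ['title' => ['Toaster'], 'price' => [], 'soldBy' => [], 'rating' => []],
]);

echo $pdo->query('SELECT COUNT(*) FROM products')->fetchColumn(), PHP_EOL; // 2
```

One transaction per category also tends to be noticeably faster than autocommitting every single INSERT.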

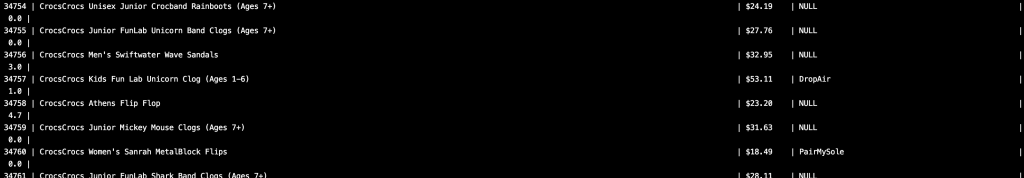

Now we’re done. If we launch the command via docker-compose or Makefile we should see records inserted into our table:

The code is available on our github repo.