Sometimes you run into a complex website that can’t be parsed with the so-called “regular” cURL + DOM XPath technique. People tend to protect their data with JavaScript techniques, and pure-JavaScript sites are popular nowadays. As you know, you can’t interpret JS with PHP or whatever other language you use for scraping (unless you scrape with Node.js, but I haven’t tried that yet).

But what if we run the scraper on a machine that has not only a CLI (command line interface) but can also launch graphical apps, for example Chrome? You’d say it’s impossible to launch a browser from PHP. In fact, it is possible! I’m going to explain how to do that and how to automate it to scrape protected data: you can not only launch the browser, but also automate it and emulate user behaviour (clicks, hovers, etc.), so you can get past even sophisticated JavaScript-based data protection. So let’s get started.

Prerequisites:

1. Download the latest version of php-webdriver-bindings from the project’s download page and unpack it into a directory; we’ll include it in our script later.

2. We will also need a Java VM (JRE) installed on our machine, because the Selenium server runs on Java. If you would like to try other, non-Java versions of the server, you’re welcome to, but in this tutorial we will use the Java one. You need selenium-server-standalone-x.xx.jar from the Selenium download page; download it to your project directory as well. NOTE: the latest version as of this writing, 2.38, did not work for me, so everything below is based on version 2.29.0, which is still available as an older release.

3. Download ChromeDriver for Selenium and unpack it into your project folder. (As far as I know, there is also a Firefox driver.)

Let’s write a .bat file (or a script for bash or your favourite shell) that launches the Selenium server with the Chrome driver, so we don’t have to type it every time we want to scrape:

```
java -jar selenium-server-standalone-2.29.0.jar -Dwebdriver.chrome.driver="chromedriver.exe"
```

Substitute your Selenium server version and the path to chromedriver.exe (step 3 of the prerequisites).

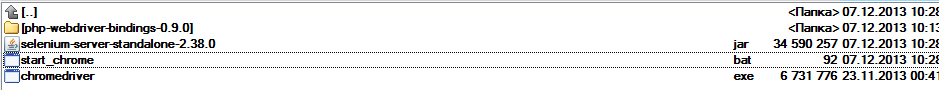

So the folder with all prerequisites should look like:

Launch the .bat/sh file we just created. If you did everything right, the output should be as follows:

```
C:\project\scraping>java -jar selenium-server-standalone-2.29.0.jar -Dwebdriver.chrome.driver="chromedriver.exe"
Dec 07, 2013 10:55:50 AM org.openqa.grid.selenium.GridLauncher main
INFO: Launching a standalone server
Setting system property webdriver.chrome.driver to chromedriver.exe
10:55:52.068 INFO - Java: Oracle Corporation 24.45-b08
10:55:52.070 INFO - OS: Windows 7 6.1 x86
10:55:52.078 INFO - v2.29.0, with Core v2.29.0. Built from revision 58258c3
10:55:52.190 INFO - RemoteWebDriver instances should connect to: http://127.0.0.1:4444/wd/hub
10:55:52.191 INFO - Version Jetty/5.1.x
10:55:52.191 INFO - Started HttpContext[/selenium-server/driver,/selenium-server/driver]
10:55:52.193 INFO - Started HttpContext[/selenium-server,/selenium-server]
10:55:52.194 INFO - Started HttpContext[/,/]
10:55:52.219 INFO - Started org.openqa.jetty.jetty.servlet.ServletHandler@fbfa2
10:55:52.219 INFO - Started HttpContext[/wd,/wd]
10:55:52.224 INFO - Started SocketListener on 0.0.0.0:4444
10:55:52.224 INFO - Started org.openqa.jetty.jetty.Server@1412332
```

Great! Our Selenium server with the Chrome driver is now listening on port 4444 on all interfaces. Time to write some PHP. Create your main .php scraping file and include the php-webdriver-bindings framework in it:

```php
<?php
require_once dirname(realpath(__FILE__)) . DIRECTORY_SEPARATOR . "php-webdriver-bindings-0.9.0"
    . DIRECTORY_SEPARATOR . "phpwebdriver" . DIRECTORY_SEPARATOR . "WebDriver.php";

error_reporting(E_ALL);
ini_set('display_errors', 1);
date_default_timezone_set('Europe/Moscow');
```

Let’s find a site to scrape, for example https://www.handhautosalesgalax.com/newandusedcars.aspx, which is written in ASP.NET and has paging. Paging like this is hard to handle with cURL: each page change is a postback carrying encoded form fields such as __VIEWSTATE and __EVENTVALIDATION, and we don’t want to deal with those in cURL. So it’s not as easy as https://site.com/?page=5. Try it yourself: capture the HTTP requests and look at the data. With the browser approach, though, we don’t need to care about any of this.
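To get a feel for what the cURL route would involve, here is a minimal sketch, assuming PHP’s DOM extension is available, that collects the hidden ASP.NET fields (inputs whose names start with a double underscore, such as __VIEWSTATE) from a page’s HTML. The function name aspNetHiddenFields is hypothetical. A cURL scraper would have to post all of these fields back on every page change; the browser does that for us.

```php
<?php
/**
 * Hypothetical helper (not part of any library): collect the hidden
 * ASP.NET WebForms fields (__VIEWSTATE, __EVENTVALIDATION, ...) from a
 * page's HTML. A cURL scraper would need to re-post all of them.
 */
function aspNetHiddenFields(string $html): array
{
    $doc = new DOMDocument();
    // Real-world HTML is rarely valid; collect libxml errors instead of printing warnings.
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($doc);
    $fields = [];
    // Hidden inputs whose name starts with "__" are the WebForms state fields.
    foreach ($xpath->query('//input[@type="hidden"][starts-with(@name, "__")]') as $input) {
        $fields[$input->getAttribute('name')] = $input->getAttribute('value');
    }
    return $fields;
}
```

Silencing libxml errors is deliberate: without it, loadHTML() emits a warning for every markup problem on a typical page.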

So:

```php
<?php
require_once dirname(realpath(__FILE__)) . DIRECTORY_SEPARATOR . "php-webdriver-bindings-0.9.0"
    . DIRECTORY_SEPARATOR . "phpwebdriver" . DIRECTORY_SEPARATOR . "WebDriver.php";

error_reporting(E_ALL);
ini_set('display_errors', 1);
date_default_timezone_set('Europe/Moscow');

handhautosalesgalax();

function handhautosalesgalax()
{
    // instantiate the WebDriver PHP class (Selenium server host and port)
    $webdriver = new WebDriver("localhost", "4444");
    // connect to the Selenium server (see above, it must be running) and start Chrome
    $webdriver->connect("chrome");

    $cars = array();
    $site = 'https://www.handhautosalesgalax.com/newandusedcars.aspx';

    // navigate to the URL
    $webdriver->get($site);

    // find the element to click by XPath (here, the "Inventory" item in the site's dnnmega menu)
    $element = $webdriver->findElementBy(LocatorStrategy::xpath, '//ul[contains(@class,"dnnmega")]/li//span[text()="Inventory"]');
    if ($element) {
        // if found, click it
        $element->click();
    }
}
```

As a result, you should see a Chrome session launched from your PHP code, navigating to the URL above and clicking the element matched by the XPath. Well done! You now have access to any data on the page via XPath, through a real browser that executes the site’s JavaScript, so JS techniques won’t prevent automated scraping: to the site, you look like a real, legitimate user. Now, a few tricks you may need when using this approach.

1. If you assign the result of $webdriver->findElementsBy() to a variable, note that it holds references to elements living in the browser page, not copies of their data. So if you later navigate the same $webdriver to a different page than the one you called findElementsBy() on, those elements are lost. You could call clone on the variable (I haven’t tried that, and I doubt it works, since a clone would still refer to the same element in the browser), or work on the results first, then close the current WebDriver session and open a new one.

2. If you search for an element before the page has fully loaded and the DOM tree hasn’t been built yet, you won’t find the element. To work around this, I use the following code; it’s not really sophisticated, but it works:

```php
$element = false;
do {
    try {
        $element = $webdriver->findElementBy(LocatorStrategy::xpath, '//ul[contains(@class,"dnnmega")]/li//span[text()="Inventory"]');
    } catch (Exception $e) {
        // element not in the DOM yet: wait a second and retry
        sleep(1);
    }
} while (!$element);
```

In effect, we wait until the element appears on the page. Beware: if your XPath query is wrong, you will end up in an infinite loop.

You could wrap this in a helper function, something like waitForLoad($xpath).
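One way to flesh out that waitForLoad($xpath) idea is sketched below, with a timeout so that a wrong XPath can’t hang the script forever. It is a minimal sketch, not part of php-webdriver-bindings: waitFor() and waitForLoad() are hypothetical names, and, like the loop above, it assumes findElementBy() throws an exception while the element is missing.

```php
<?php
/**
 * Hypothetical helper: call $find repeatedly until it returns a truthy value
 * or $timeoutSeconds elapse. Exceptions from $find count as "not found yet".
 * Returns the found value, or null on timeout (instead of looping forever).
 */
function waitFor(callable $find, float $timeoutSeconds = 10.0, int $pollMicroseconds = 500000)
{
    $deadline = microtime(true) + $timeoutSeconds;
    do {
        try {
            $result = $find();
            if ($result) {
                return $result;
            }
        } catch (Exception $e) {
            // element not in the DOM yet: fall through and retry
        }
        usleep($pollMicroseconds);
    } while (microtime(true) < $deadline);
    return null;
}

/**
 * Hypothetical waitForLoad() built on waitFor(), using the same
 * php-webdriver-bindings call as the loop above.
 */
function waitForLoad($webdriver, $xpath, $timeoutSeconds = 10.0)
{
    return waitFor(function () use ($webdriver, $xpath) {
        return $webdriver->findElementBy(LocatorStrategy::xpath, $xpath);
    }, $timeoutSeconds);
}
```

Usage: `$element = waitForLoad($webdriver, '//ul[contains(@class,"dnnmega")]/li//span[text()="Inventory"]');`, then check `$element !== null` before clicking. Keeping the polling in a generic waitFor() also lets you wait for conditions other than a single element.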
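For trick 1, the simplest way to work on the results before navigating away is to copy what you need into plain PHP values. Below is a minimal sketch; extractTexts() is a hypothetical helper, and it assumes findElementsBy() returns a PHP array of elements that each have a getText() method.

```php
<?php
/**
 * Hypothetical helper: copy the visible text of each element into a plain
 * array of strings. Strings survive navigation; element references do not.
 * Assumes every element exposes getText(), as the bindings' elements do.
 */
function extractTexts(array $elements): array
{
    $texts = [];
    foreach ($elements as $element) {
        // trim surrounding whitespace from the rendered text
        $texts[] = trim($element->getText());
    }
    return $texts;
}
```

For example, `$texts = extractTexts($webdriver->findElementsBy(LocatorStrategy::xpath, '//div'));` followed by `$webdriver->get($nextUrl);` leaves $texts intact, because it contains only strings ($nextUrl is a placeholder).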

That’s it, happy scraping!

Hello

Can you please help me scrape data from a site with Selenium and PHP? Specifically, I want to find all the div tags on the current page using a CSS selector and get the text from all of them.

Thanks

Hi, thanks for your interest. Did you try something like `$elements = $webdriver->findElementsBy(LocatorStrategy::xpath, '//div');` and then `foreach ($elements as $element) { echo $element->getText(); }`?